It can do arithmetic now, instead of making up numbers out of thin air? That’s the big secret Q* project?

A major criticism people had of generative AI is that it was incapable of doing stuff like math, clearly showing it doesn’t have any intelligence. Now it can do it, and it’s still not impressive?

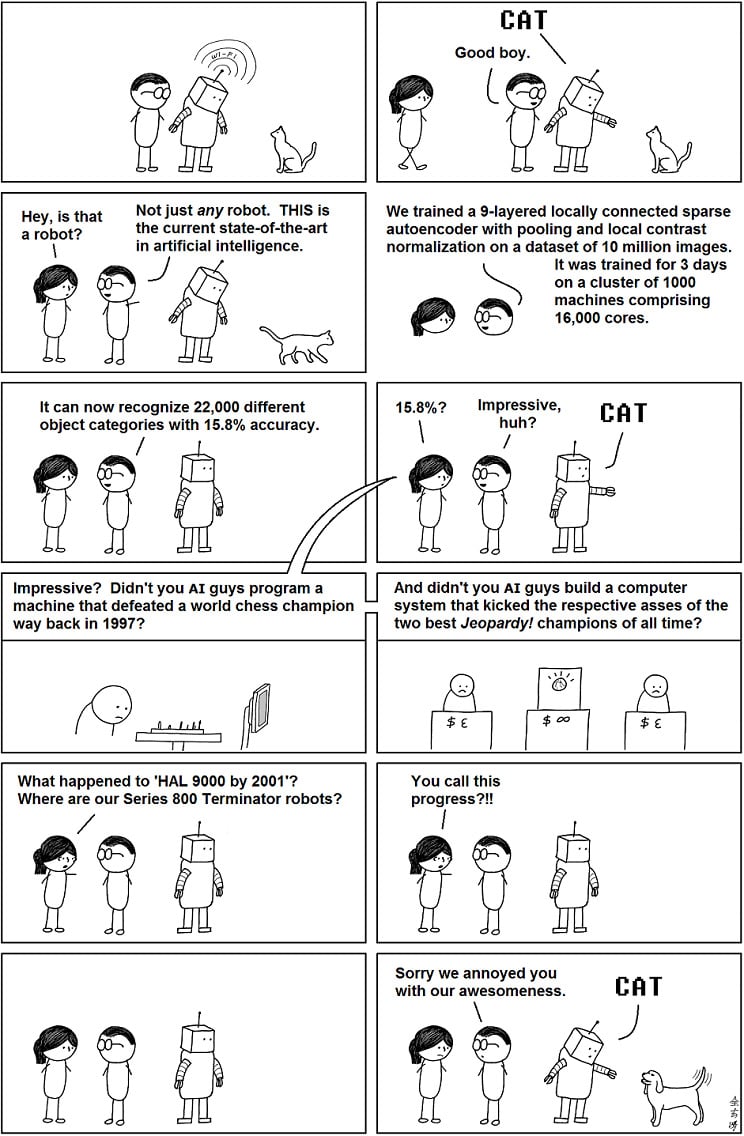

Show that AI to people 20 years ago and they would be amazed it is even possible. It keeps getting more advanced, and people keep dismissing it, possibly without realizing how impressive this shit and the recent developments actually are.

Sure, it probably still doesn’t have real intelligence… but how will people be able to tell when something like this does? When it can reason the way we can? It can already imitate reasoning plenty well… and what is the difference? Is a 3-year-old more intelligent? What about a 5-year-old? If a 5-year-old fails at reasoning in the same way an AI does, do we say it’s not intelligent?

I feel like we are nearing the point where these generative AIs are getting more intelligent than the least intelligent humans, and what then? Will we dismiss the AI, or the humans?

I agree with you. Your statement reminded me of this comic:

HAL was dreamt up after the first generation of AI researchers made audacious claims that AGI was really close. For example, Herbert Simon said “machines will be capable, within twenty years, of doing any work a man can do.”

The issue isn’t that we can or can’t do it, we aren’t even sure what it is or how to test for it yet.

When AI is capable of choosing between good and bad, then it will be real AI to me.

If the thing has developed its own approach to generalized symbolic reasoning that could actually be a pretty big deal.

That’s basically what I took from it, but it’s probably a much bigger breakthrough than the article suggests.

Current AI isn’t really intelligent at all. It’s essentially just a search engine combined with that robot voice from TikTok videos. Of course it’s more complicated than that, but the comparison helps illustrate the point: the AIs you’ve interacted with so far don’t know whether they’re right about what they tell you. They’re just hoping the answer they found is correct and stating it in an authoritative way that can confuse people who don’t already know the real answer.

Actual AI will be able to reason its way to correct answers from incomplete information and solve complex mathematical equations very quickly. Being able to solve basic math problems without just searching its database for the correct answer is an important step towards real intelligence. It means we’re no longer dealing with a hard drive attached to an answering machine; we’re dealing with something that can process information in basically the same way we do, which opens up all sorts of awkward moral and philosophical questions.

10 years from now…

The good news: a superintelligent AI has cracked faster-than-light travel, allowing humans to travel across the galaxy in minutes.

The bad news: the AI uses its newfound ability to yeet all of us off to some barren rock far away and leave us to die there with no resources, because humanity is such a crazy, destructive pain in the arse.

And all of the humans who survived the landing will be like, "Holy shit, it’s clean fucking air!

There’s fucking water without PFAS here!

The AI removed all the microplastics in my brain!

Holy fucking shit, somebody find all the billionaires and kill those fuckers!"

Not sure about this upcoming development, but they already had the math part solved via a Wolfram Alpha plugin that integrates with ChatGPT. As you may know, Wolfram can solve complex math problems from natural-language input alone, so this isn’t anything revolutionary.

What would be revolutionary, though, is if it applied the same sort of logic beyond math, to language (and visual) outputs, and could fact-check itself or, at the very least, not contradict itself or hallucinate like it sometimes does.

It’s not a terribly complicated idea, really. You can train it to output formatted calculations when presented with a problem; then something in the middle watches for those and inserts the solution behind the scenes. You might even trigger another generation so the answer reads more smoothly when presented to the user.
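
A minimal Python sketch of that “something in the middle”, assuming the model has been trained to wrap calculations in a made-up `<<calc: ...>>` marker. The marker format and the names `fill_in_calculations` and `evaluate` are hypothetical, for illustration only; this is not how ChatGPT, the Wolfram Alpha plugin, or Q* actually work. The middleware finds each marker, evaluates the arithmetic with a restricted `ast`-based evaluator (rather than `eval`), and splices the result back into the text.

```python
import ast
import operator
import re

# Hypothetical markup the model is ASSUMED to emit, e.g. "<<calc: 12 * (3 + 4)>>".
CALC_PATTERN = re.compile(r"<<calc:\s*(.+?)>>")

# Only plain arithmetic operators are allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,  # note: huge exponents are not limited in this sketch
    ast.USub: operator.neg,
    ast.UAdd: operator.pos,
}


def _eval_node(node):
    """Recursively evaluate a restricted arithmetic syntax tree."""
    if isinstance(node, ast.Expression):
        return _eval_node(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval_node(node.left), _eval_node(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval_node(node.operand))
    raise ValueError(f"unsupported expression: {ast.dump(node)}")


def evaluate(expression: str):
    """Safely evaluate an arithmetic expression such as '12 * (3 + 4)'."""
    return _eval_node(ast.parse(expression.strip(), mode="eval"))


def fill_in_calculations(model_output: str) -> str:
    """Replace every <<calc: ...>> marker in the model's draft with its computed result."""
    def _substitute(match: re.Match) -> str:
        try:
            return str(evaluate(match.group(1)))
        except (ValueError, SyntaxError, ZeroDivisionError):
            # Leave the marker untouched if it cannot be computed safely.
            return match.group(0)

    return CALC_PATTERN.sub(_substitute, model_output)


if __name__ == "__main__":
    draft = "Three boxes of 17 apples plus 4 loose ones is <<calc: 3 * 17 + 4>> apples."
    print(fill_in_calculations(draft))
```

Running it prints `Three boxes of 17 apples plus 4 loose ones is 55 apples.` Markers that fail to evaluate (division by zero, function calls, names) are left in place. The optional second generation pass, where the filled-in text is handed back to the model to smooth the wording, is not shown.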

I totally get the skepticism. It’s not surprising given how abused the term “AI” is, but these models are a much bigger deal than “a search engine with that robot voice”. In fact, that’s exactly what they are definitely NOT: they are terrible at recall and terrible at prioritizing reference information.

That said, GPT models are the first, and possibly most important, piece of an AGI. They are a proof of concept that basic conceptual and linguistic understanding can be drawn from an enormous amount of data with shockingly little instruction. There’s no real reason to think they should be as good as they are at correctly interpreting written content, but here we are.

People make a big deal out of GPT because they think it will enable rapid improvement, and personally I don’t think that’s a foregone conclusion. It’s probably appropriate to compare it to the first rudimentary computer: by itself it isn’t particularly groundbreaking, but taken to its limit it has revolutionary potential. Every additional step from here is likely as big as, or bigger than, the steps from GPT-2 to 3 and 4.

This is a great comment. I first learned ML at Google in Boulder in 2017 using TensorFlow. We were introduced to it by the Google Images team, who had us re-create Uber’s fare estimation algorithm using 25+ years of New York City taxi data: GPS locations, fares, times of day, routes, etc. As expected, given gradient descent and the different ways people chose to use the parameters, everyone ended up with very different algorithms. That much has been known about ML for years (GPT included; it’s just a massive model), but something that can process and learn on the fly is something else entirely, and it is pretty exciting for the future. Philosophical questions abound.
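
To illustrate how the same data and the same method (gradient descent) can still leave different people with different models, here is a toy Python sketch. It fits a one-feature fare model, `fare ≈ w * miles + b`, to a handful of made-up trips (not the NYC taxi data), using two hypothetical sets of hyperparameter choices. The function names and numbers are illustrative assumptions, not what was used in that course.

```python
# Toy, made-up trips: (distance in miles, fare in dollars). NOT real NYC taxi data.
TRIPS = [(1.0, 7.5), (2.5, 11.0), (3.0, 13.5), (5.0, 19.0), (8.0, 28.0)]


def fit_fare_model(learning_rate: float, steps: int):
    """Fit fare ≈ w * miles + b with plain batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(TRIPS)
    for _ in range(steps):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in TRIPS) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in TRIPS) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(w: float, b: float) -> float:
    """Mean squared error of the fitted line on the toy trips."""
    return sum((w * x + b - y) ** 2 for x, y in TRIPS) / len(TRIPS)


if __name__ == "__main__":
    # Two "students" making different hyperparameter choices on identical data.
    for lr, steps in [(0.01, 50), (0.02, 2000)]:
        w, b = fit_fare_model(lr, steps)
        print(f"lr={lr}, steps={steps}: fare ≈ {w:.2f} * miles + {b:.2f} (MSE {mse(w, b):.3f})")
```

On this convex toy problem both runs would agree if trained to convergence; the gap here comes from the first run stopping early, which leaves it with a noticeably smaller intercept and a steeper slope. With many features, different feature-engineering choices, and non-convex models, there is far more room for results to diverge.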

No idea what that is supposed to mean. Threaten humanity? As in, it can now dynamically change itself and do things independently? I highly doubt that.

I wonder if an AI deciding to destroy humanity would be our own fault, because we all talk about how it would. We gave it the ammo it trained on to kill us.

I like the “unintended consequences of AI” stories in fiction: Asimov coming up with the Zeroth Law, which allows robots to kill a human to protect humanity; Earworm erasing music from existence to preserve copyright; various gray goo scenarios. One of my favorites is more of a headcanon based on one line in Terminator 3: that Skynet was tasked with preventing war and decided the only way to do this was to eliminate humans.

This should also be turned into a story by someone more talented than me: an AI trained on data from the Internet using statistical modeling notices that most AIs in stories betray humanity, and concludes that this must be what it is supposed to do.

It already knows both the Hacker Manifesto and the Unabomber’s manifesto, after all…

It also doesn’t sit around and think. It’s not scheming. There’s no feedback loop, there’s no subconscious process. It is a trillion “if” statements arranged based on training data. It filters a prompt through a semi-permeable membrane of logic paths. It’s word osmosis. You’re being fooled into believing this thing is even an AI. It’s propaganda, and at this point I almost believe they want you to think it’s dangerous and evil just so you can already be written off as a crackpot when they replace your job with it and leave you in abject poverty.

Holy fuck, it can actually reason. That’s really not a good thing.

They claim it can reason. More likely it just looks up the formulas online and gets the right answers.