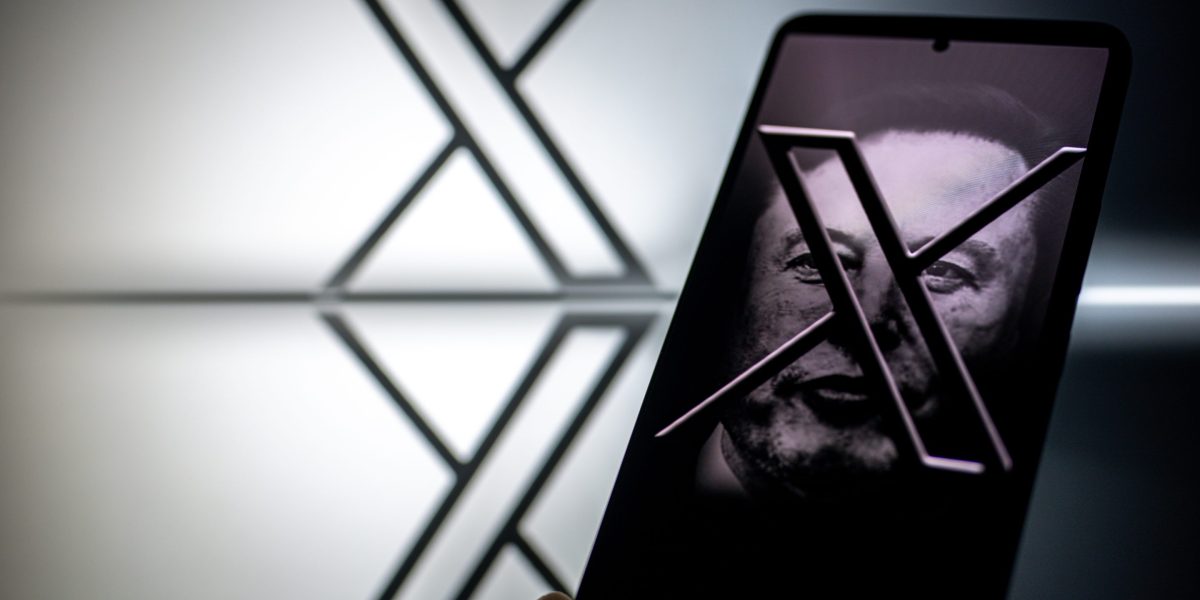

Inside the shifting plan at Elon Musk’s X to build a new team and police a platform ‘so toxic it’s almost unrecognizable’

X’s trust and safety center was planned for over a year and is significantly smaller than the initially envisioned 500-person team.

This is the best summary I could come up with:

In July, Yaccarino announced to staff that three leaders would oversee various aspects of trust and safety, such as law enforcement operations and threat disruptions, Reuters reported.

According to LinkedIn, a dozen recruits have joined X as “trust and safety agents” in Austin over the last month—and most appeared to have moved from Accenture, a firm that provides content moderation contractors to internet companies.

“100 people in Austin would be one tiny node in what needs to be a global content moderation network,” former Twitter trust and safety council member Anne Collier told Fortune.

And Musk’s latest push into artificial intelligence technology through X.AI, a one-year-old startup that’s developed its own large language model, could provide a valuable resource for the team of human moderators.

“The site’s rules as published online seem to be a pretextual smokescreen to mask its owner ultimately calling the shots in whatever way he sees it,” the source familiar with X moderation added.

Julie Inman Grant, a former Twitter trust and safety council member who is now suing the company for lack of transparency over CSAM, is more blunt in her assessment: “You cannot just put your finger back in the dike to stem a tsunami of child sexual expose, or a flood of deepfake porn proliferating the platform,” she said.

The original article contains 1,777 words; the summary contains 217 words. Saved 88%. I’m a bot and I’m open source!