And if so, why exactly? It says it’s end-to-end encrypted. The metadata isn’t. But what is metadata and is it bad that it’s not? Are there any other problematic things?

I think I have a few answers for these questions, but I was wondering if anyone else has good answers/explanations/links to share where I can inform myself more.

It says it’s end-to-end encrypted.

WhatsApp is closed source and made by an advertising company. I wouldn’t really count on that.

Saying they do E2EE but not doing it would literally be fraud on a massive scale. Can’t say I’d put that kind of behavior past Meta, to be fair, lol

But as the other guy said, metadata is already a lot.

They would just say that they have a different definition of E2EE, or quietly opt you out of it and bury something in their terms of service that says you agree to that. You might even win in court, but that will be a wrist slap years later if at all.

No single individual will beat a corporation as large as Facebook in a court battle. You could have all the evidence in the world and they’ll still beat you in court and destroy your life in the process. It took a massive class action lawsuit to hold them accountable for the Cambridge Analytica case, and the punishment was still pennies to them.

Look at the DuPont case. There was abundant evidence that they were knowingly poisoning the planet, and giving people cancer, and they still managed to drag that case on for 30 years before a judgement. In the end they were fined less than 3% of their profit from a single year. That was their punishment for poisoning 99% of all life on planet earth, knowingly killing factory workers, bribing government agencies, lying, cheating, and just all around being evil fucks. 3% of their profit from a single year.

“We just capture what you wrote and to whom before it gets encrypted and sent; we see nothing wrong with that” —Mark Zuckerberg, probably

They don’t really need the actual contents of your messages if they have the associated metadata, since it is not encrypted, and provides them with plenty of information.

So idk, I honestly don’t see why I shouldn’t believe them. Don’t get me wrong though, I fully support the scepticism.

All they need is the encryption key for the message, not the message itself.

If the keys are held by them, they have access.

When you log into another device, if all your chat history shows up, then their servers have your encryption key.

This is what I came to express as well. Unless the software is open source, both client and server, what they say is unverifiable and it’s safest to assume it’s false. Moreover, the owning company has a verifiable and well known history of explicitly acting against user privacy. There is no reason to trust them and every reason not to.

The biggest problem is that it uploads your entire contact list and thus social network to Facebook. That alone tells them a lot about who you are, and crucially, also leaks this information about your friends (whether they use it or not).

With contacts disabled it’s a pain to use (last time I tried you couldn’t add people or see names, but you could still write to people after they contacted you if you didn’t mind them just showing up as a phone number).

It still collects metadata (who you text, when, from which WiFi), which reveals a lot. But if both you and your contact use it properly (backups disabled or E2E encrypted), your messaging content doesn’t get leaked by default. They could ship a malicious version, and if someone reports your content it gets leaked, of course, but overall it’s still much better than e.g. Telegram, which collects all of the above data AND doesn’t have useful E2EE (you can enable it but few do, and the crypto is questionable).
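To make “metadata reveals a lot” concrete, here is a minimal Python sketch. The records are made up and the fields (sender, recipient, timestamp, WiFi name) are my assumption for illustration, not WhatsApp’s actual format. It only shows that you can profile someone without reading a single message.

```python
from collections import Counter
from datetime import datetime

# Hypothetical metadata records: (sender, recipient, timestamp, wifi).
# Note that there is no message content anywhere in here.
RECORDS = [
    ("alice", "bob", "2024-03-01T23:40", "home-wifi"),
    ("alice", "bob", "2024-03-02T23:55", "home-wifi"),
    ("alice", "clinic", "2024-03-04T09:10", "office-wifi"),
    ("alice", "bob", "2024-03-05T00:15", "home-wifi"),
    ("alice", "clinic", "2024-03-11T09:05", "office-wifi"),
]


def profile(user, records):
    """Summarise who `user` talks to, when, and from where."""
    contacts = Counter()
    late_night = Counter()
    networks = Counter()
    for sender, recipient, timestamp, wifi in records:
        if sender != user:
            continue
        contacts[recipient] += 1
        networks[wifi] += 1
        hour = datetime.fromisoformat(timestamp).hour
        if hour >= 22 or hour < 5:
            late_night[recipient] += 1
    top = contacts.most_common(1)
    return {
        "top_contact": top[0][0] if top else None,
        "late_night_contacts": sorted(late_night),
        "networks": sorted(networks),
    }


if __name__ == "__main__":
    print(profile("alice", RECORDS))
```

From five records you already get the closest contact, who gets texted around midnight, a recurring weekday contact, and which networks the phone sits on.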

Is Facebook bad for privacy?

WhatsApp is Facebook. Literally. WhatsApp sold themselves to Facebook.

So yes: it’s bad for privacy.

It’s owned by Meta, you better forget about privacy lol!

TL;DR: Yes it is, it’s terrible. What would you expect from a Facebook product? Use Signal instead.

Thank you, but I’m looking for actual arguments that would sway someone that is trying to come to a rational conclusion. “The reputation of the company is bad” is of course valid evidence, but it would be much more interesting to know what Facebook actually gains from having users on WhatsApp.

First, it is very likely that the WhatsApp encryption is compromised. It definitely shouldn’t be trusted, as it is completely proprietary and thus not transparent to users and independent auditors. Also, unlike Signal, WhatsApp doesn’t encrypt any metadata.

The biggest source of WhatsApp user data for Facebook, though, is address books. When you grant WhatsApp permission to access your contacts, that data is sent to Facebook servers unencrypted. That way, Facebook can see the names and phone numbers of all of your contacts. This is not just bad for you, it’s also bad for everyone whose phone number you saved in your address book: their data is sent to Facebook even if they don’t use any Facebook services themselves.

Also, when you have WhatsApp or any app installed on your phone, it by default has access to many things that you can’t control or restrict. For example, it can access some unique device identifiers, look at the list of apps you have installed on your phone, or access sensors like the gyroscope and accelerometer, which can absolutely be used to track you. It’s better to keep shady apps like those made by Facebook, Google, Amazon, Microsoft or other surveillance corporations off your devices. Use FOSS alternatives with a proven track record like Signal if they are available.

I understand they have access to all this information you listed, but what do they gain from that if I don’t use any (other) Facebook services? Normally, I understand that it allows for better ad targeting, but WhatsApp does not have ads, and if I don’t use any other Meta services that actually serve ads, how could this info being out be a problem for me?

Facebook has your address book, so they have the phone numbers and names of all of your friends, work colleagues, family members and other people you happen to know. They can see your entire social graph. This kind of metadata is extremely valuable.

If you just have the phone number of someone in your phone book who at some point becomes a terrorist, you are now also under full investigation. I don’t know about you, but I find this scary and dystopian, and unfortunately it’s real. If someone you know does something wrong, you are now also a suspected criminal. Metadata is sometimes even more valuable than the actual data itself. To quote the former NSA director Michael Hayden: “We Kill People Based on Metadata”.

Especially since the Snowden leaks, we know that we should protect ourselves from corporate/government overreach and surveillance, and the best way to do this is avoiding proprietary software. FOSS is superior in every way: it’s built by volunteers who just want to help out other people and try to make the world a better place, it’s transparent to the user and can be verified, and you have the freedom to do with it whatever you want.

We really shouldn’t be supporting multi-billion dollar corporations led by weirdos. Did you know that Mark Zuckerberg bought all the land around his house, so that none of his neighbors can see what he is doing, for privacy reasons, while he probably caused the biggest invasion of privacy in the last decade? We shouldn’t be supporting such people. We really shouldn’t.
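Here is a rough Python sketch of the address book point. The phone numbers, names and the upload format are all invented for illustration, and I’m not claiming this is how Meta stores anything. It just shows how a server that receives address books can build a profile of someone who never signed up.

```python
from collections import defaultdict

# Hypothetical uploads: uploader's number -> {contact number: saved name}.
UPLOADS = {
    "+100": {"+200": "Dana (work)", "+300": "Mom"},
    "+200": {"+100": "Sam", "+400": "Dr. Lee"},
    "+300": {"+100": "Sam", "+400": "Dr Lee oncology"},
}


def shadow_profiles(uploads):
    """Profile every number that appears in an address book but never uploaded one."""
    profiles = defaultdict(lambda: {"known_by": set(), "names": set()})
    for uploader, address_book in uploads.items():
        for number, saved_name in address_book.items():
            if number in uploads:
                continue  # this person uses the app themselves
            profiles[number]["known_by"].add(uploader)
            profiles[number]["names"].add(saved_name)
    return dict(profiles)


if __name__ == "__main__":
    for number, info in shadow_profiles(UPLOADS).items():
        print(number, sorted(info["known_by"]), sorted(info["names"]))
```

The person behind “+400” never installed anything, yet the server knows two people who know them and what they are saved as.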

It might be E2EE but it’s not encrypted on your phone and it’s closed source. How do you know they don’t send the conversation data to their company? How do you know they don’t get the encryption keys to decipher the messages for them?

How do you know they don’t get the encryption keys to decipher the messages for them?

My guess is that they just capture keywords before you send it. They don’t need to read the contents of the sent conversation when both parties to the conversation are using an app they own. They can detect keywords before sending, log and report them, then send the message encrypted. No need to retain encryption keys since they already extracted what they want.
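A toy Python sketch of that guess, to show why E2EE alone doesn’t rule it out. Nothing here is WhatsApp’s code, there is no evidence in this thread that they actually do it, the keyword list is hypothetical, and the XOR “cipher” is only a stand-in for a real protocol. The point is just that the client sees the plaintext before it encrypts.

```python
import secrets

# Hypothetical watch list, purely for illustration.
KEYWORDS = {"vacation", "mortgage", "diagnosis"}


def toy_encrypt(data: bytes, key: bytes) -> bytes:
    """One-time-pad XOR as a stand-in for real E2EE. Applying it twice decrypts."""
    return bytes(d ^ k for d, k in zip(data, key))


def send(message: str):
    """Return (ciphertext, key, keyword_hits) for a message."""
    # 1. The app has the plaintext, so it can extract whatever it wants.
    lowered = message.lower()
    hits = sorted(word for word in KEYWORDS if word in lowered)
    # 2. Only afterwards is the message encrypted for the recipient.
    data = message.encode("utf-8")
    key = secrets.token_bytes(len(data))
    return toy_encrypt(data, key), key, hits


if __name__ == "__main__":
    ciphertext, key, hits = send("Booked the vacation, the mortgage can wait")
    print("leaked before encryption:", hits)
    print("recipient decrypts:", toy_encrypt(ciphertext, key).decode("utf-8"))
```

The message on the wire is still properly encrypted and the recipient can decrypt it, so an outside observer would see nothing unusual.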

Other apps may have code published in a repository, but the path from repository into the Play Store onto my phone is not clear. How do I know that they don’t add extra tracking code on top during the build and release to the Play Store? For example, with a popular alternative app like Signal?

You don’t have to use the Play Store. You can either compile Signal yourself or use a trustworthy 3rd party build of Signal. Personally, I use Molly. It’s Signal for Android but with some neat tweaks. It’s not even available on the Play Store, it’s exclusive to F-Droid and Accrescent. You can’t do any of this with proprietary garbage like WhatsApp: you can neither modify it to add features, nor look at the source code or compile it yourself.

Are you really asking about the privacy of a Facebook app?

Are you going to be flippant or help educate the person?

The answer is: Yes, WhatsApp is bad for privacy.

While the messages themselves are encrypted, the WhatsApp app can still collect data about you from the device you’re using it on:

- Phone number

- Operating system

- Associated contacts, etc.

And given this is a Meta-owned company, we can probably assume they profile you from that.

Your address book is uploaded to Facebook servers when you use WhatsApp. And each time you interact, they know with whom, and they link this information with other profiles and users of Meta products.

If you’re on Android, the E2E is meaningless as WhatsApp can read what you type, just as the Facebook app can, since they have keyboard access.

I don’t know that they do this, just saying it’s a leak point, and since it’s Meta/Facebook/Zuckerberg, well, let’s just say I’m a bit cynical.

It says it’s end-to-end encrypted. The metadata isn’t. But what is metadata and is it bad that it’s not?

It’s not just that. Their app can easily have tracking components that look at the list of installed apps, how often you charge your phone, how often you are on a WiFi network, etc.

Also, the app and any tracking component it has can freely communicate on the WiFi network. That doesn’t only mean the internet, but the local home network too, where they can find out (by MAC address, open ports and the responses of the corresponding programs) what kind of devices you have, when you have them powered on, and what software you use on them (do you use a BitTorrent client? Syncthing? KDE Connect? lots of other examples). And if, let’s say, your smart TV publishes your private info on the network, it does not matter that you have blocked LG (just an example) domains in your local DNS server, because Facebook’s apps can just relay it through your phone and then their own servers.

If the app’s code has been obfuscated, Exodus Privacy and others won’t be able to detect the tracking components in it.

Are others different, like Signal and how do I know?

As a normal user I install both in exactly the same way, and I have no way to verify that the code of the APK on the Play Store is exactly the same as the code published by Signal as open source. How could I trust Signal more?

Are others different, like Signal

Signal’s encryption is sound, but there’s an uncomfortable fact: it uses Google Play Services dependencies (for maps and other things, I think). There are articles (1, 2) that discuss how, because of that, it has functionality that may allow another process (the Google Play Services process) to read the Signal app’s state or even its memory directly, which can mean the contents of the screen or the in-memory cache of decrypted messages.

Security audits often only audit the app’s own source code, without the dependencies that it uses.

The Google Play Services dependency could have a “flaw” today, or it could grow a new “feature” one day, allowing what I described above.

It may or may not be connected that Moxie (Signal’s founder) is vehemently against any kind of fork, including those that just get rid of non-free dependencies (like the Google Play Services dependencies). His other comments are also telling.

Because of this, I have decided for myself that I won’t promote it as a better system and won’t install Signal on my phone, because I think it gives a false sense of security, and for other reasons like it still requiring an identity-linked identifier (a phone number) for registration.

However, if there were people whom I could only reach through Signal, there’s Molly. They maintain 2 active forks, one of which is rid of the problematic dependencies, and I would probably use that. Molly-FOSS is not published in the official F-Droid repository, but they have their own, so the F-Droid app can still be used to install it and keep it updated.

and how do I know?

It’s hard, unfortunately, and in the end you need to trust a service and the app you use for it.

F-Droid apps are auditable: they are forbidden from having non-free (non-auditable) dependencies, and popular apps available in the official repository are usually fine.

With Google Play, again the truth is uncomfortable.

On Android, the app’s signing key (a cryptographic key) makes it possible to verify that the app that you are going to install has not been modified by third parties.

Several years ago Google mandated that all app developers hand in their signing keys, so that Google can sign the apps instead of them, basically impersonating them. Unfortunately this also means that unless the app’s total source code is available (along with all the source code of its dependencies), it’s impossible to know if Google has made modifications to the app that they make accessible on the Google Play store. This in itself is already a huge trust issue to me, but what is even worse is that they can just install custom modified versions for certain users on a case by case basis, with the same signing key that once meant that it was not modified by third parties like Google, and no one will ever know.

Just an example to show that the above is possible: Amazon’s app store similarly requires the developer to hand over the app’s signing key, and they admit in the documentation that they add their own tracking code to every published app.
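For apps whose full source is available and that build reproducibly, the check can be roughly sketched in Python like this: build the app yourself, pull the APK the store installed, and compare the files inside. This is a simplified sketch under my own assumptions, not an official tool. The function names are made up, it skips everything under `META-INF/` because signatures legitimately differ between signers, and a real comparison has to deal with more edge cases.

```python
import hashlib
import zipfile


def apk_digests(apk):
    """Map each file inside the APK (a zip) to its SHA-256, skipping META-INF/."""
    digests = {}
    with zipfile.ZipFile(apk) as archive:
        for info in archive.infolist():
            if info.is_dir() or info.filename.startswith("META-INF/"):
                continue  # signature files are expected to differ
            digests[info.filename] = hashlib.sha256(archive.read(info)).hexdigest()
    return digests


def differing_entries(store_apk, own_build_apk):
    """Names of files that are missing on one side or have different content."""
    store = apk_digests(store_apk)
    own = apk_digests(own_build_apk)
    return sorted(
        name for name in store.keys() | own.keys()
        if store.get(name) != own.get(name)
    )


if __name__ == "__main__":
    import sys

    diff = differing_entries(sys.argv[1], sys.argv[2])
    print("APKs match" if not diff else "Differences in: " + ", ".join(diff))
```

An empty result means the store’s copy contains the same code and resources as your own build. That only helps for open source apps; with something closed like WhatsApp there is nothing to compare against.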

Thank you a lot, this is great information!

That’s what they say.

Meta/Facebook has already lied countless times before, so who knows. (You can google Facebook lawsuits. The number of results is scary.)

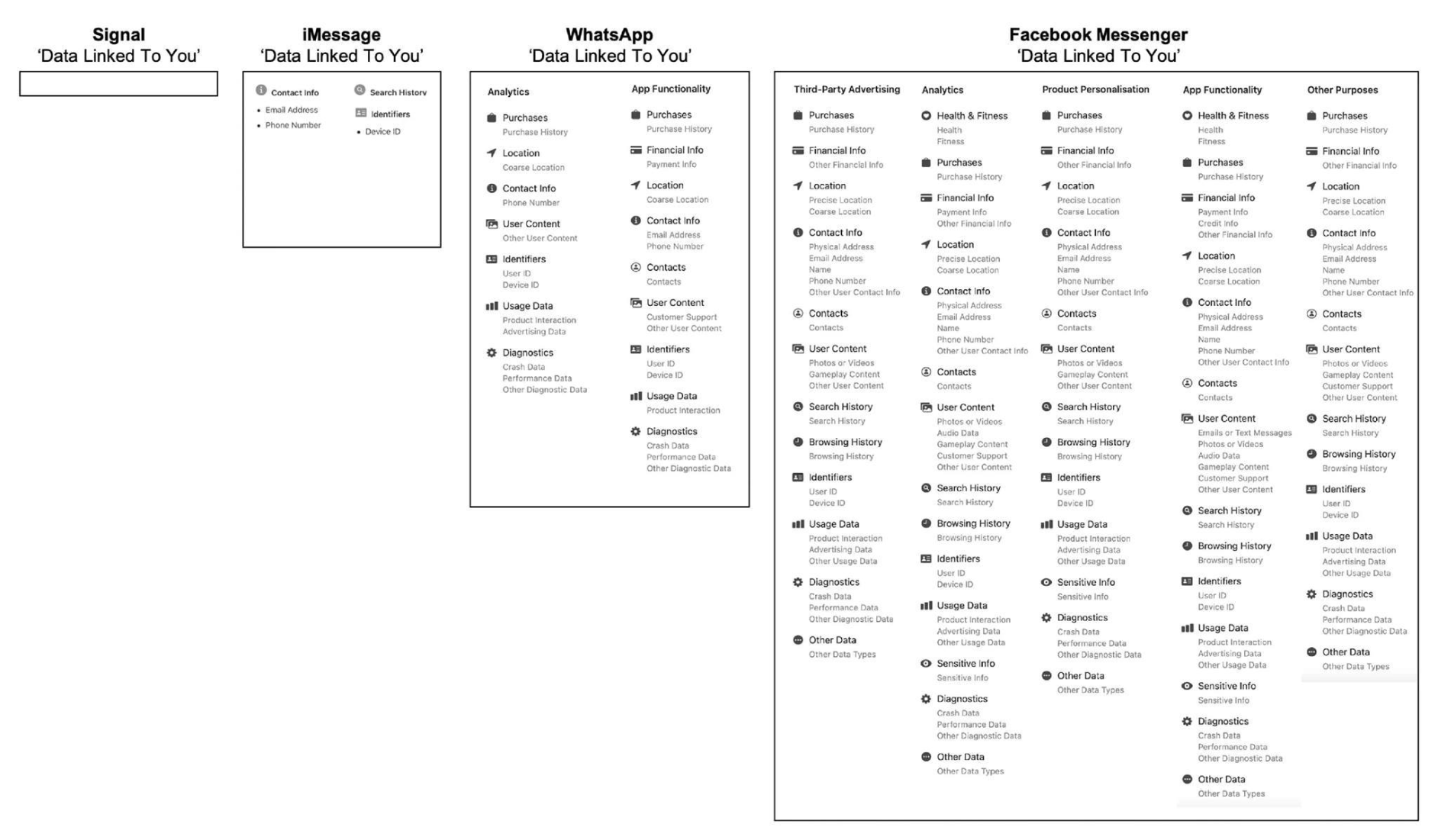

This image somehow is such low quality on my device that I can’t read any of the text on it.

Yes.