The guy seems to be getting increasingly arrogant. I would rather chat with AI than hear or read what he says.

“AI will take more and more control over society every day, it never sleeps, it never stops, and is here to stay forever!” / AI

Holy christ he is so high on his own farts.

If you replace my GPU with AI hardware, are you implying that I’m supposed to use this AI hardware to drive my games instead of a GPU? It seems much more plausible that GPUs will gain AI capabilities rather than be replaced by AI hardware. Why would I not need a GPU anymore?

This smells more like a big dream to me than an observation of where the industry is going.

They are saying that a hardware AI would be able to replace your GPU’s functionality, so yes, it would be the thing that game developers would use to output pixels to your screen.

It’s a bold statement in a way, but there’s solid reason behind wanting to make this happen.

Generative AI is currently capable of creating images that contain extremely complex rendering techniques. Global illumination, with an infinite number of area light sources, is just kind of free. Subsurface Scattering is just how light works in this model of the world, and doesn’t require pre-processing, multiple render passes, a g-buffer, or costly ray casts to get there. Reflections don’t require screen-space calculations, irradiance probes, or any other weird tricks. Transparency is order independent because it doesn’t make sense for it not to be, and light diffuses or diffracts because of course it does.

Modern high-end GPUs are hacks we have settled on for pushing information into pixels on the screen. They use a combination of a typical raster-based graphics pipeline, hardware-accelerated ray tracing, and AI upscaling and denoising techniques to approximate solutions to a lot of these problems.

There are definitely things that developers would need in order for AI hardware to replace GPUs, such as any kind of temporal consistency, and significantly more control over the resulting pixels.

And to get both game developers and consumers to transition over you’ll need it to first be part of even low end GPUs for quite a while.

It’s not a terrible idea at its core. It’s definitely not 5-10 years out, though.

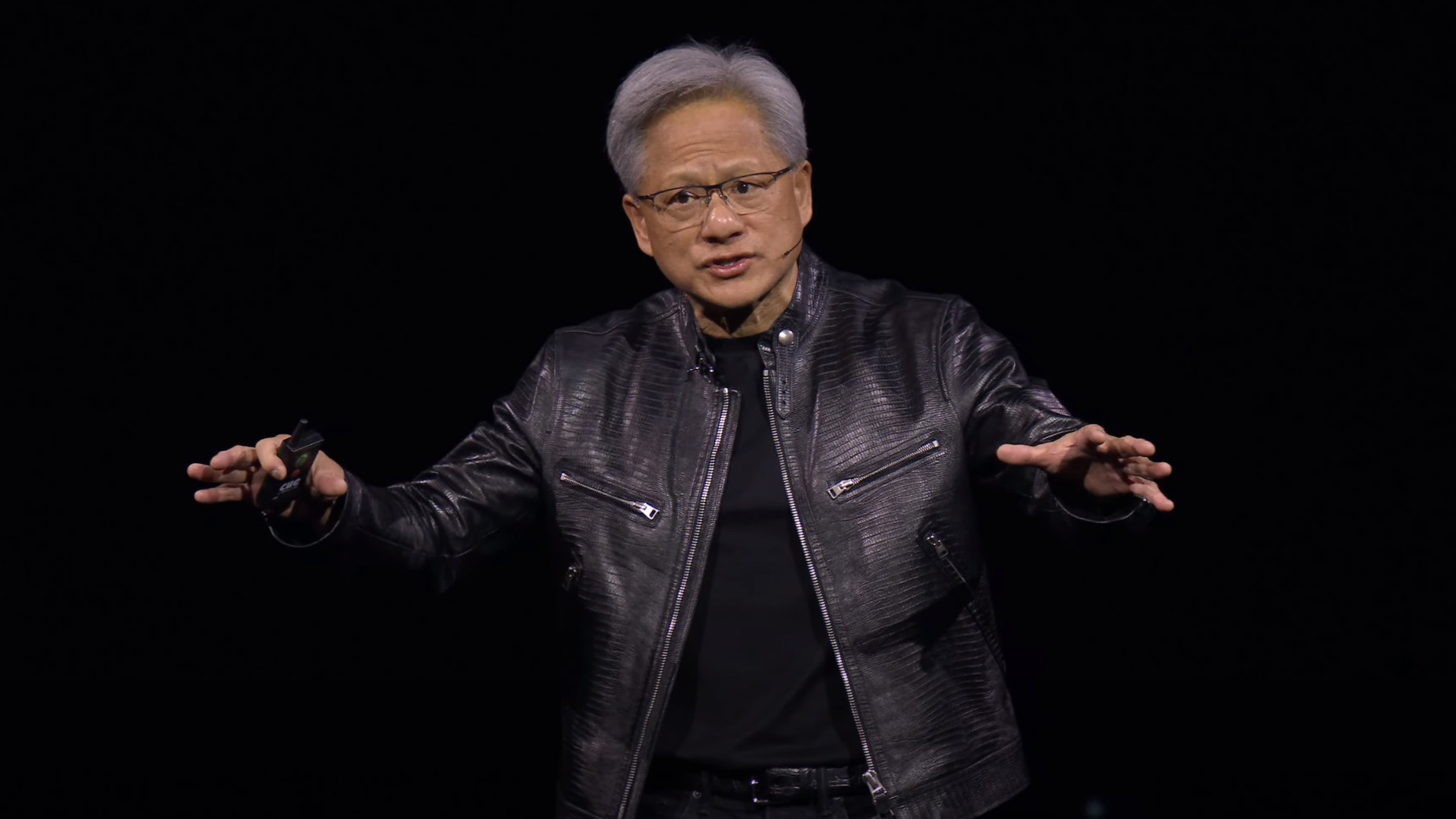

I don’t know, it seems that Nvidia knows what they are doing. They are still ahead of the game in AI because they were trying to be in it before other people. From what I heard on NPR, they are like 5-10 years ahead of everybody else in the AI game. Might be a big dream, but if they do what they did with AI, it could be possible.

I don’t even like upscaling and frame generation, feels fake to me. Show me the real graphics as the game artists made them.

Nobody asked for it so rightly fuck off.

AI is starting to give me paperless society vibes.

Or self-driving cars. It will get to a point, not the point everyone is thinking of right now, and then stall there. Just like everything else, people overhype it in the beginning.

Difference is, I want self-driving cars. You can have your Ferraris and Porsches, I want a car that drives me to work while I nap in the back.

Ignoring my wet fantasy for a second though, I get your point and agree. Dare to dream on this one though.

Replace? What the fuck is he smoking. Let’s replace gaming with a Roomba while we’re at it.

He’s not entirely wrong given the goals and features currently offered.

Take DLSS, for example: upscaling and frame generation are “AI” pixels. Nvidia is currently testing an AI-assisted form of Auto HDR that in practice is better than the Windows implementation in terms of brightness contrast (although it’s a bit too aggressive in color saturation). We’re moving to a point where AI has some influence on each individual pixel in the game.

The compute die on a GPU may just end up surpassing the GPU die size at some point, making it more of a compute card than a graphics card, similar to how the Blackwell reveal die was.

What do you mean specifically by the compute die being bigger than the GPU? As far as I am aware it’s one single die bundling GPU + CUDA etc. cores?

Blackwell brings Nvidia into the age of multi chip based dies, so it’s a matter of time before components are separated in the same sort of way AMD GPU dies have separated I/O. Intel’s GPUs are heading down a similar path. Currently they are pretty monolithic, but as time progresses, the compute side of the die may be larger than the graphics on the die.

Especially if the idea is that it may happen within 5-10 years.

Nice, thanks. “multi chip die” were the keywords I was missing - finding articles now

And just how much will you be charging for that privilege?

Nothing, at first, and even then you will always have access to the “Free Tier” processing of the hardware!

And what will run the AI that replaces your GPU? GPUs of course (rebranded as “AI accelerators”). So yeah, win-win for team green.