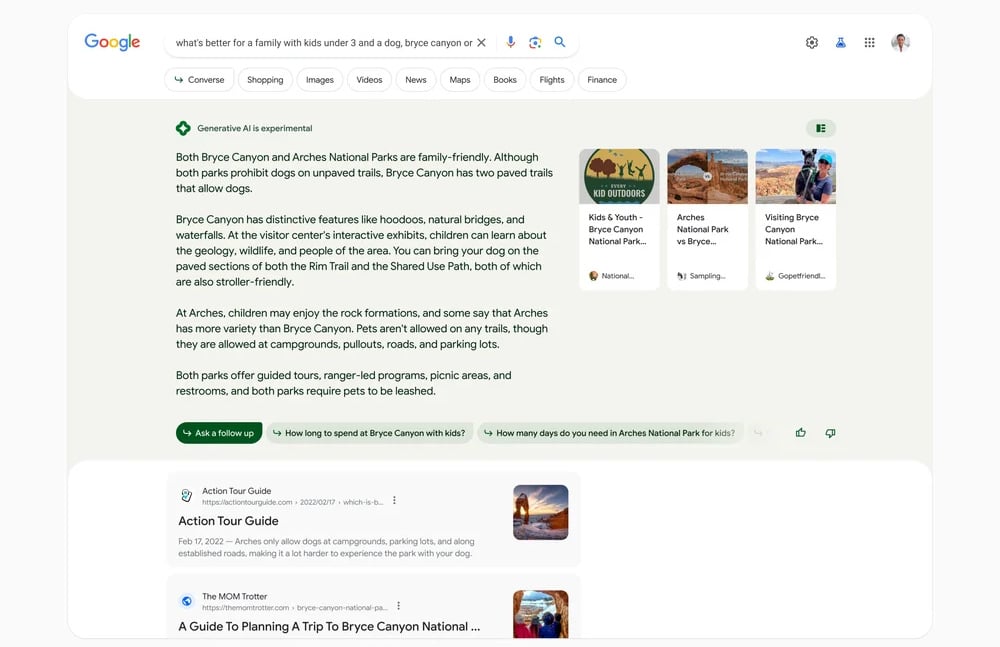

If you’re in the US, you might see a new shaded section at the top of your Google Search results with a summary answering your query, along with links for more information. That section, produced by Google’s generative AI technology, used to appear only if you had opted into the Search Generative Experience (SGE) in the Search Labs platform. Now, according to Search Engine Land, Google has started adding the experience on a “subset of queries, on a small percentage of search traffic in the US.” That is why you could be getting Google’s experimental AI-generated section even if you haven’t switched it on.

Almost every time I ask a direct question, the two AI answers directly contradict each other. Yesterday I asked if vinegar cuts grease. I received explanations for both why it’s an excellent grease cutter and why it isn’t, because it’s an acid.

I think this will be a major issue with AI. Just because it was trained on a huge wealth of knowledge doesn’t mean that it was trained on correct knowledge.

Which makes its correct answers and its confidently wrong answers look as plausible as each other. One needs to apply real intelligence to determine which to trust, making the AI tool mostly useless.

Exactly!

I don’t see any reason why being trained on writing informed by correct knowledge would cause it to be correct frequently, unless you’re expecting it to just lift sentences verbatim from the training data.


Showing different viewpoints in order to not appear biased. It’s the cornerstone of democracy after all.

😛