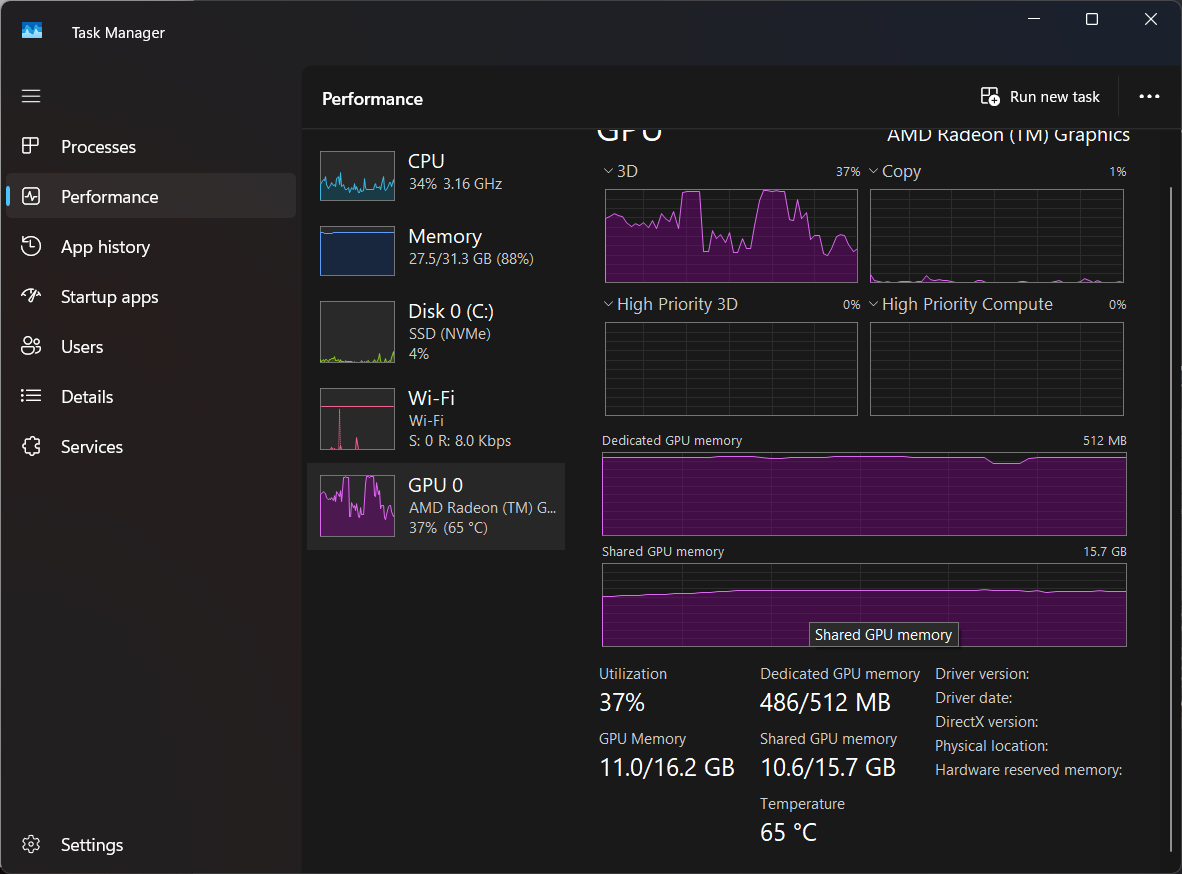

tl;dw: some of the testing shows 300-500% improvements on the 16GB model. Some games are completely unplayable on the 8GB card while delivering an excellent experience on the 16GB one.

It really does seem like Nvidia is intentionally trying to confuse their own customers for some reason.

To me it sounds like they are preying on gamers who aren’t tech savvy or are desperate. Just a continuation of being anti-consumer and anti-gamer.

Yup. This is basically aimed at the people who only know that integrated GPUs are bad and that they need a dedicated card, so system manufacturers can build a prebuilt that technically checks that box for as little money as possible.

> It really does seem like Nvidia is intentionally trying to confuse their own customers for some reason.

for money/extreme greed

Okay well that’s the low-hanging fruit but explain to me the correlation? How does confusing their customers fuel their greed?

Uninformed buyers will buy the 8GB card, get a poor experience, and be forced to buy a new card sooner rather than later.

So their strategy is making and selling shitty cards at high prices? Don’t you think that would just make consumers consider a competing brand in the future?

For most consumers it might not; the amount of Nvidia ~~propaganda~~ advertisement in games is huge.

Yea, I don’t know why buying a shitty product should convince me to throw more money at the company. They don’t have a monopoly, so I would just go to their competitor instead.

It’s like teens and iPhones: they don’t care if they pick up a used 3-year-old iPhone for more money than a new Android, they want the iPhone branding.

The reviews said that it was a better card than the other brand.

Just imagine how bad those must have been!

They don’t know they’ve been ripped off.

They had trouble increasing memory even before this AI nonsense. Now they have a perverse incentive to keep it low on affordable cards, to avoid undercutting their own industrial-grade products.

Which only matters thanks to anticompetitive practices leveraging CUDA’s monopoly. Refusing to give up the fat margins on professional equipment is what killed DEC. They successfully miniaturized their PDP minicomputers while personal computers became serious business, but they refused to let those run existing software. They crippled their own product and the market destroyed them. That can’t happen here, because ATI is not allowed to participate in the inflated market of… linear algebra.

The flipside is: why the hell doesn’t any game work on eight gigabytes of VRAM? Devs. What are you doing? Does Epic not know how a texture atlas works?

It’s not that they don’t work.

Basically what you’ll see is kind of like a cache miss, except the stall to go ‘oops, don’t have that’ and fetch the required bits from system memory is very slow. So you can see 8GB cards getting 20fps and 16GB ones getting 40 or 60, simply because the path to get the missing textures is fucking slow.

And worse, you’ll get big framerate dips and the game will feel like absolute shit because you keep running into hitches loading textures.
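To put rough numbers on the cache-miss comparison, here is a toy simulation of a texture cache with least-recently-used eviction. Everything in it is an assumption for illustration: the function name, the millisecond costs and the workload are made up, and real drivers manage residency in far more complicated ways.

```python
from collections import OrderedDict

def simulate_frame_times(frames, capacity, hit_ms=0.01, miss_ms=2.0, base_ms=10.0):
    """Return per-frame times (ms) for an LRU cache that holds `capacity` textures.

    All costs are invented illustrative values, not measurements.
    """
    cache = OrderedDict()  # texture id -> True, ordered from oldest to newest use
    frame_times = []
    for frame in frames:
        t = base_ms  # fixed cost of everything that is not texture fetching
        for tex in frame:
            if tex in cache:
                cache.move_to_end(tex)  # mark as most recently used
                t += hit_ms
            else:
                t += miss_ms  # slow path: fetch from outside "VRAM"
                cache[tex] = True
                if len(cache) > capacity:
                    cache.popitem(last=False)  # evict the least recently used
        frame_times.append(t)
    return frame_times

# Hypothetical workload: every frame touches the same 12 textures in order.
frames = [list(range(12)) for _ in range(60)]
small = simulate_frame_times(frames, capacity=8)   # working set does not fit
large = simulate_frame_times(frames, capacity=16)  # working set fits

for name, times in (("small", small), ("large", large)):
    print(name, "avg ms/frame:", round(sum(times) / len(times), 2))
```

With this workload the small cache misses on every single access, because each texture gets evicted just before it is needed again. The large cache only pays the miss cost on the first frame.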

It’s made worse in games where you can’t reasonably predict what texture you’ll need next (e.g. Fortnite and other online games that are, you know, played by a lot of people). But even in games where you might be able to reasonably guess, you still run into the simple fact that the textures in a modern game are higher quality, and thus bigger, than the ones you had 5 years ago. 8GB in 2019 and 8GB in 2025 are not equivalent.

It’s crippling the performance of the GPU that may be able to perform substantially better, and for a relatively low BOM cost decrease. They’re trash, and should all end up in the trash.

That’s what I’m on about. We have the technology to avoid going ‘hold up, I gotta get something.’ There’s supposed to be a shitty version that’s always there, in case you have to render it by surprise, and say ‘better luck next frame.’ The most important part is to put roughly the right colors onscreen and move on.

id Software did this on Xbox 360… loading from a DVD drive. Framerate impact: nil.
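The ‘shitty version that’s always there’ idea can be sketched roughly like this. It is not id Software’s actual implementation, just a minimal illustration; `TextureStreamer`, `sample`, `end_frame` and the `"low"`/`"high"` labels are all hypothetical names.

```python
class TextureStreamer:
    """Toy model: a low-res copy of every texture is assumed always resident."""

    def __init__(self, loads_per_frame=1):
        self.resident = set()   # ids whose high-res version is loaded
        self.pending = []       # ids waiting for a high-res load, in request order
        self.loads_per_frame = loads_per_frame

    def sample(self, tex_id):
        """Never blocks: use high-res if resident, else fall back and queue a load."""
        if tex_id in self.resident:
            return "high"
        if tex_id not in self.pending:
            self.pending.append(tex_id)
        return "low"  # roughly the right colors, better luck next frame

    def end_frame(self):
        """Finish a bounded number of loads so the per-frame cost stays fixed."""
        for _ in range(min(self.loads_per_frame, len(self.pending))):
            self.resident.add(self.pending.pop(0))


streamer = TextureStreamer()
first = streamer.sample("rock")   # high-res not loaded yet, so low-res is drawn
streamer.end_frame()              # the load completes between frames
second = streamer.sample("rock")  # now the high-res version is available
print(first, second)
```

The point of the design is that a miss costs visual quality for a frame or two instead of costing frame time. The sketch leaves out eviction entirely, which is exactly where an undersized VRAM budget starts to hurt.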

The virtual texture tech is not almighty: if the allocation is smaller than what you need, you still run into page swapping. It acts similarly to a traditional cache miss once you cross a certain threshold, because you can’t keep enough “tiles” in memory. Texture quality popping and then stuttering are the symptoms as you go from slightly less VRAM than needed to severely insufficient.

it is 2019, the 2060 Super has 8gb of vram. it is 2020, the 3060ti has 8gb of vram. it is 2023, the 4060ti has 8gb of vram. it is 2025, the 5060ti has 8gb of vram.

My 1080 from 2017 has 8gb of vram. Still works fine.

If you play games that weren’t released after 2022, sure.

Regular GTX 1080 here. Running Space Marine 2 at 60 fps at 1080p on mid-ish settings. And that’s basically the most graphics-intensive game I’ve played recently. Games like Total Warhammer 3, Dark Deity 2, Factorio or Heroes of the Storm don’t care about the GPU and play great at 1080p.

Speaking of, what is the next best cost-efficient GOAT in the generations that followed the GTX 10 series? I’m gonna be needing a new GOAT at some point in the future - would love to hear recommendations.

I’m also interested in the answer to this. As a fellow 1080p gamer, some quick research had me hovering around an Intel A770, the only one offering 16GB at my target price of around €300, but I’m open to suggestions.

you can absolutely run newer titles with a 1080/1080ti. maybe not at 4k max settings, but a little bit of compromise should easily get at least 60fps in most titles.

1080 is likely the all around goat in graphics card history

I am pretty sure you can run Animal Well, at 4k max. It came out last year also.

I was assuming the commenter I replied to was talking about unoptimized AAA games that overly rely on DLSS.

animal well looks cool tho I’ll have to check it out! love the art style.

I don’t buy AAA that much anymore. Also, you can play roms, build, play arcade, gamble; the list goes on for this game.

My 3060 has 12gb of vram…

You probably have to return the 4gb extra then.

The fact that NVIDIA is not allowing AIBs to send the 8GB card to reviewers is quite telling. They are simply banking on uninformed purchasers and system integrators to sell this variant. That’s another low for NVIDIA, but hardly surprising to anyone.

Planned obsolescence.

I agree, but it is still crazy that there are people out there making $500-plus purchases without the smallest bit of research. I really hope this card fails, if only because it deserves to.

Can we get a gpu that just has some ddr5 slots in it?

You would need so many channels for that to be viable.

Speed.
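For a sense of scale on the channel count, here is some back-of-the-envelope arithmetic. The specific figures are assumptions picked for illustration: DDR5-6400 on a 64-bit channel, versus GDDR7 at 28 Gbps per pin on a 128-bit bus, which is roughly what a current midrange card uses.

```python
import math

def bandwidth_gb_s(transfers_mt_s, bus_width_bits):
    """Peak bandwidth in GB/s = transfers per second * bytes per transfer."""
    return transfers_mt_s * 1e6 * (bus_width_bits / 8) / 1e9

# Assumed figures, not taken from the thread:
ddr5_channel = bandwidth_gb_s(6400, 64)    # one DDR5-6400 channel
gddr7_card = bandwidth_gb_s(28000, 128)    # 28 Gbps per pin, 128-bit bus

channels_needed = math.ceil(gddr7_card / ddr5_channel)
print(f"DDR5 channel: {ddr5_channel:.1f} GB/s")
print(f"GDDR7 card:   {gddr7_card:.1f} GB/s")
print(f"DDR5 channels needed to match: {channels_needed}")
```

Under those assumptions a single socketed channel delivers about a ninth of what the soldered memory does, and that is before the signal-integrity cost of putting a socket in the path.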

Why not many little simple sockets into which you pop as many memory chips as needed?

ddr5 was just a placeholder in the above statement, whatever works. TPTB are welcome to release a line of gpu-ram with appropriate connections.

On the flip-side, every game worth playing uses 2GB vram or less at 1080p.

That’s an absolute lie and you know it.

I don’t even have a GPU, but to be honest I don’t even game anymore cuz I work more hours than there are in a day.

Even 8GB is fine to be honest, even at high settings. People are a bit dramatic about it.

I have a game that eats 11 gb of vram on low at 1080p (I play it on windowed). It suffers from some Unreal engine shenanigans and it’s also a few years old.

Just like normal ram, if you have more to use then it’ll get used. It doesn’t mean it requires more than 8gb in order to run well.

I played all of Cyberpunk on high settings with 8gb.

You’re not wrong, but then there’s games like this that need at least 6 gb (more on dx12) to run on low without it running out of memory and either crashing or not launching. This is an actual issue with this particular game.

Edit: Cyberpunk has gotten a lot better though and will run on things it has no business running on.

UE is probably the worst engine ever made, even games from 20 years ago look better than that blurry mess of an engine. I hope nobody makes any game on it anymore, most of them are also badly optimized, never understood why people like that engine.

Same reason people still use Unity after the whole shitfest on “per install tax”: large community, huge knowledge base, tutorials everywhere, professional courses that focus on it.

Kinda ironic that the Unreal Tournament games (99, 2004, 3) were all incredibly optimized.

Video editing and AI require as much VRAM as you can get. Not everyone uses the cards just for gaming.

Then don’t buy the current-gen low-end card for video editing, mate. Get previous-gen with more vRAM, or go AMD.