The quote was originally about news and journalists.

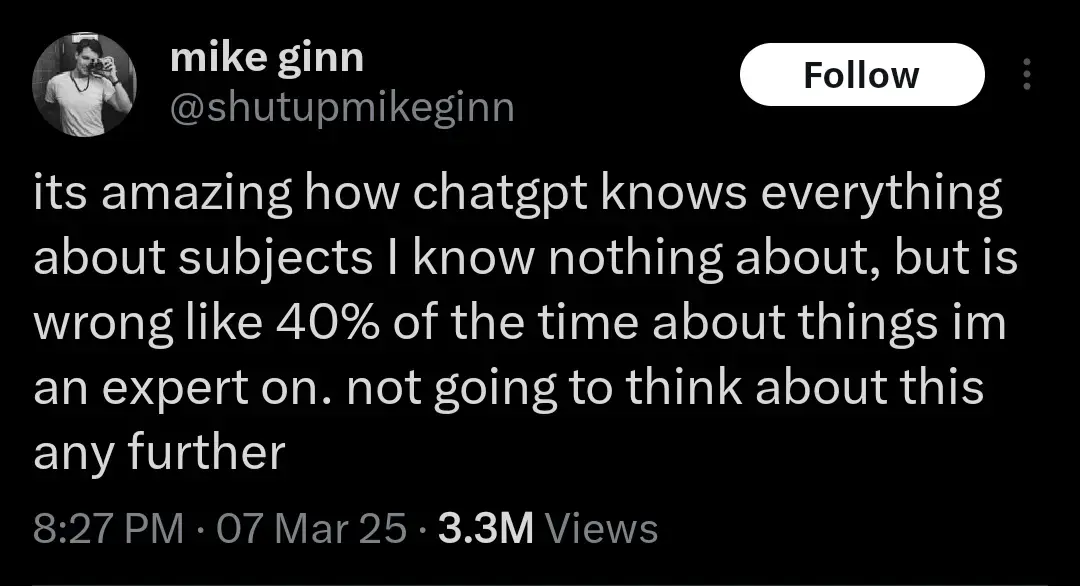

Another realization might be that the humans whose output ChatGPT was trained on were probably already 40% wrong about everything. But let’s not think about that either. AI Bad!

This is a salient point that’s well worth discussing. We should not be training large language models on any supposedly factual information that people put out. It’s super easy to call out a bad research study and have it retracted. But you can’t just explain to an AI that that study was wrong, you have to completely retrain it every time. Exacerbating this issue is the way that people tend to view large language models as somehow objective describers of reality, because they’re synthetic and emotionless. In truth, an AI holds exactly the same biases as the people who put together the data it was trained on.

I’ll bite. Let’s think:

- there are three humans who are 98% right about what they say, and where they know they might be wrong, they indicate it

- now there is an llm (fuck capitalization, I hate the ways they are shoved everywhere that much) trained on their output

- now the llm is asked about the topic and computes the answer string

By definition, that answer string can contain all the probably-wrong things without the proper indicators (“might”, “under such and such circumstances”, etc.).

If you want to say a 40%-wrong llm means 40%-wrong sources, prove me wrong.

If anything, it’s up to you to prove that a hypothetical edge case you dreamed up is more likely than what happens in a normal bell curve. Given the size of typical LLM training data, this seems futile, but if that’s how you want to spend your time, hey, knock yourself out.

Lol. Be my guest and knock yourself out, dreaming you know things


AI Bad!

Yes, it is. But not in, like, a moral sense. It’s just not good at doing things.

I couldn’t be bothered to read the article, so I got ChatGPT to summarise it. Apparently there’s nothing to worry about.

those who used ChatGPT for “personal” reasons — like discussing emotions and memories — were less emotionally dependent upon it than those who used it for “non-personal” reasons, like brainstorming or asking for advice.

That’s not what I would expect. But I guess that’s cuz you’re not actively thinking about your emotional state, so you’re just passively letting it manipulate you.

Kinda like how ads have a stronger impact if you don’t pay conscious attention to them.

AI and ads… I think that is the next dystopia to come.

Think of asking ChatGPT about something and it randomly looks for excuses to push you to buy Coca-Cola.

That sounds really rough, buddy, I know how you feel, and that project you’re working on is really complicated.

Would you like to order a delicious, refreshing Coke Zero™️?

I can see how targeted ads like that would be overwhelming. Would you like me to sign you up for a free 7-day trial of BetterHelp?

Your fear of constant data collection and targeted advertising is valid and draining. Take back your privacy with this code for 30% off Nord VPN.

Drink verification can

Or all-natural cocoa beans from the upper slopes of Mount Nicaragua. No artificial sweeteners.

that is not a thought i needed in my brain just as i was trying to sleep.

what if gpt starts telling drunk me to do things? how long would it take for me to notice? I’m super awake again now, thanks

It’s a roundabout way of writing “it’s really shit for this use case, and people who actively try to use it that way quickly find that out.”

Imagine discussing your emotions with a computer, LOL. Nerds!


Bath Salts GPT

Wake me up when you find something people will not abuse and get addicted to.

Fren, that is the nature of humanity.

The modern era is dopamine machines

I know a few people who are genuinely smart but got so deep into the AI fad that they are now using it almost exclusively.

They seem to be performing well, which is kind of scary, but sometimes they feel like MLM people with how pushy they are about using AI.

Most people don’t seem to understand how “dumb” AI is. And it’s scary when I read shit like people using AI for advice.

People also don’t realize how incredibly stupid humans can be. I don’t mean that in a judgemental or moral kind of way, I mean that the educational system has failed a lot of people.

There’s some % of people that could use AI for every decision in their lives and the outcome would be the same or better.

That’s even more terrifying IMO.

I was convinced about 20 years ago that at least 30% of humanity would struggle to pass a basic sentience test.

And it gets worse as they get older.

I have friends and relatives that used to be people. They used to have thoughts and feelings. They had convictions and reasons for those convictions.

Now, I have conversations with some of these people I’ve known for 20 and 30 years and they seem exasperated at the idea of even trying to think about something.

It’s not just complex topics, either. You can ask them what they saw on a recent trip, what they are reading, or how they feel about some show, and they look at you like the hospital intake lady from Idiocracy.

No, no. Not being judgemental and moral is how we got to this point in the first place. Telling someone who is doing something foolish that they are acting foolishly used to be pretty normal. But after a couple of decades of internet white-knighting, correcting or even voicing opposition to obvious stupidity is just too exhausting.

Dunning-Kruger is winning.

I plugged this into gpt and it couldn’t give me a coherent summary.

Anyone got a tldr?

It’s short and worth the read, however:

tl;dr you may be the target demographic of this study

Lol, now I’m not sure if the comment was satire. If so, bravo.

Probably being sarcastic, but you can’t be certain unfortunately.

Based on the votes it seems like nobody is getting the joke here, but I liked it at least

Power Bot 'Em was a gem, I will say

For those genuinely curious, I made this comment as a joke before reading; I had no idea it would be funnier after reading.

Clickbait titles suck

Something bizarre is happening to media organizations that use ‘clicks’ as a core metric.

That is peak clickbait, bravo.

It depends: are you in Soviet Russia?

In the US, so as of 1/20/25, sadly yes.

TIL becoming dependent on a tool you frequently use is “something bizarre” - not the ordinary, unsurprising result you would expect with common sense.

If you actually read the article, I’m pretty sure the bizarre thing is really these people using a ‘tool’, forming a toxic parasocial relationship with it, becoming addicted, and beginning to see it as a ‘friend’.

No, I basically get the same read as OP. Idk I like to think I’m rational enough & don’t take things too far, but I like my car. I like my tools, people just get attached to things we like.

Give it an almost human, almost friend type interaction & yes I’m not surprised at all some people, particularly power users, are developing parasocial attachments or addiction to this non-human tool. I don’t call my friends. I text. ¯\(°_o)/¯

I loved my car. Just had to scrap it recently. I got sad. I didn’t go through withdrawal symptoms or feel like I was mourning a friend. You can appreciate something without building an emotional dependence on it. I’m not particularly surprised this is happening to some people either, especially with the amount of brainrot out there surrounding these LLMs, so maybe bizarre is the wrong word, but it is a little disturbing that people are getting so attached to something that is so fundamentally flawed.

Sorry about your car! I hate that.

In an age where people are prone to feeling isolated & alone, for various reasons…this, unfortunately, is filling the void(s) in their life. I agree, it’s not healthy or best.

We called our old Honda Odyssey the Batmobile, because we got it on Halloween day and stopped at a novelty store where we got some flappy rubber bats for house decoration. On the way home I laid one of them on the dashboard and boom, the car got its name. The Batmobile was part of the family for more than 20 years, through thick and thin, never failing to get us where we needed to go. My daughter and I both cried when it was finally towed away to a donation place. Personifying inanimate objects and developing an emotional attachment to them is absolutely normal. I even teared up a little just typing this.

What the Hell was the name of the movie with Tom Cruise where the protagonist’s friend was dating a fucking hologram?

We’re a hair’s breadth from that bullshit, and TBH I think that if falling in love with a computer program becomes the new de facto normal, I’m going to completely alienate myself by making fun of those wretched chodes non-stop.

Supporting neurodivergence, unlike some conformity-obsessed bigots. Just not always.

Yes, it says the neediest people are doing that, not simply “people who use ChatGPT a lot”. This article is like “Scientists warn civilization-killer asteroid could hit Earth”, and then the article clarifies that there’s a 0.3% chance of impact.

You never viewed a tool as a friend? Pretty sure there are some guys that like their cars more than most friends. Bonding with objects isn’t that weird, especially one that can talk to you like it’s human.

This reminds me of the pang I felt when I recently discovered my trusty heavy-duty crowbar aka “Mister Crowbar” had disappeared. Presumably some guys we hired to work on our deck walked off with it. When I was younger and did all my remodel work myself, I did a lot of demolition with my li’l buddy. He was pretty heavy and only came out for the really tough jobs. I hope he’s having fun somewhere.

now replace chatgpt with these terms, one by one:

- the internet

- tiktok

- lemmy

- their cell phone

- news media

- television

- radio

- podcasts

- junk food

- money

You go down the list of inventions pretty progressively, skimming the best of the last decade or two, then TV and radio… a century old, or at most two.

Then you skip to currency, which is several millennia old.

They’re clearly under the control of Big Train, Loom Lobbyists and the Global Gutenberg Printing Press Conspiracy.

Hell, the written word destroyed untold generations of oral history.

“Modern Teens Killing Travelling Minstrel Industry”

It’s too bad that some people don’t seem to comprehend that all ChatGPT is doing is word prediction. All it knows is which next word fits best based on the words before it. To call it AI is an insult to AI… we used to call OCR AI, now we know better.

LLM is a subset of ML, which is a subset of AI.
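For anyone curious what “word prediction” means mechanically, here is a toy sketch. It is a big simplification and an assumption on my part, not how ChatGPT is built: it uses a plain bigram counter (the helper names `train_bigram`, `predict_next`, and `generate` are made up for illustration) that always picks the word that most often followed the previous one in its training text. Real LLMs predict tokens with a neural network over a much longer context, so treat this only as intuition.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    # For each word, count which words came right after it in the training text.
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    # Greedy choice: the single most frequent follower; None if the word never had one.
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, max_words: int = 10) -> str:
    # Keep appending the predicted next word until there is no follower or we hit the limit.
    out = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Hypothetical training text: one hedged sentence, two unhedged ones.
corpus = [
    "the study might be wrong",
    "the study is wrong",
    "the study is right",
]
model = train_bigram(corpus)
print(generate(model, "the"))  # prints: the study is wrong
```

On this made-up corpus the greedy output is “the study is wrong”: the hedge “might” never appears, because “is” followed “study” more often than “might” did. That is only what this toy does; whether and how often real models drop hedges is the very thing being argued about upthread.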

I knew a guy I went to rehab with. Talked to him a while back and he invited me to his Discord server. It was him, like three self-trained LLMs, and a bunch of inactive people he had invited, like me. He would hold conversations with the LLMs as if they had anything interesting or human to say, which they didn’t. Honestly a very disgusting image; I left because I figured he was on the shit again and had lost it, and I didn’t want to get dragged into anything.

Jesus that’s sad

Yeah. I tried talking to him about his AI use but I realized there was no point. He also mentioned he had tried RCs again and I was like alright you know you can’t handle that but fine… I know from experience you can’t convince addicts they are addicted to anything. People need to realize that themselves.

Not all RCs are created equal. Maybe his use has the same underlying issue as the AI friends: problems in his real life and now he seeks simple solutions

I’m not blindly dissing RCs or AI, just his use of them (the post was about people with problematic uses of this tech, so I gave an example). He can’t handle RCs historically; he slowly loses it and starts to use daily. We don’t live in the same country anymore and were never super close, so I can’t say exactly what his circumstances are right now.

I think many psychedelics, at the right time in life and for the right person, can produce lifelong insight, even through problematic use. But he literally went to rehab because he had problems due to his use. He isn’t dealing with something, that’s for sure. He doesn’t admit it is a problem either, which bugs me. It is one thing to give up and decide to just go wild, another to do it while pretending one is in control…

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things. I got an AI search response just yesterday that dramatically understated an issue by citing an unscientific ideologically based website with high interest and reason to minimize said issue. The actual studies showed a 6x difference. It was blatant AF, and I can’t understand why anyone would rely on such a system for reliable, objective information or responses. I have noted several incorrect AI responses to queries, and people mindlessly citing said response without verifying the data or its source. People gonna get stupider, faster.

I don’t know how people can be so easily taken in by a system that has been proven to be wrong about so many things

Ahem. Wasn’t there an election recently, in some big country, with an uncanny similarity to that?

Yeah. Got me there.

I like to use GPT to create practice tests for certification exams. Even if I give it very specific guidance to double-check what it thinks is the correct answer, it will gladly tell me I got questions wrong, and I have to ask it to triple-check the right answer, which is what I actually answered.

And in that amount of time it probably would have been just as easy to type up a correct question and answer rather than try to repeatedly corral an AI into checking itself for an answer you already know. Your method works for you because you have the knowledge. The problem lies with people who don’t and will accept and use incorrect output.

Well, it makes me double check my knowledge, which helps me learn to some degree, but it’s not what I’m trying to make happen.

That’s why I only use it as a starting point. It spits out “keywords” and a fuzzy gist of what I need, then I can verify or experiment on my own. It’s just a good place to start or a reminder of things you once knew.

An LLM is like talking to a rubber duck on drugs while also being on drugs.